Tutorial #10 – Filters and Photoconsistency

Understanding mesh and point cloud filters

Welcome to the 3DF Zephyr tutorial series.

In this tutorial, you will learn how to properly use point cloud filters, mesh filters, and Photoconsistency in 3DF Zephyr.

· Point Cloud Filters

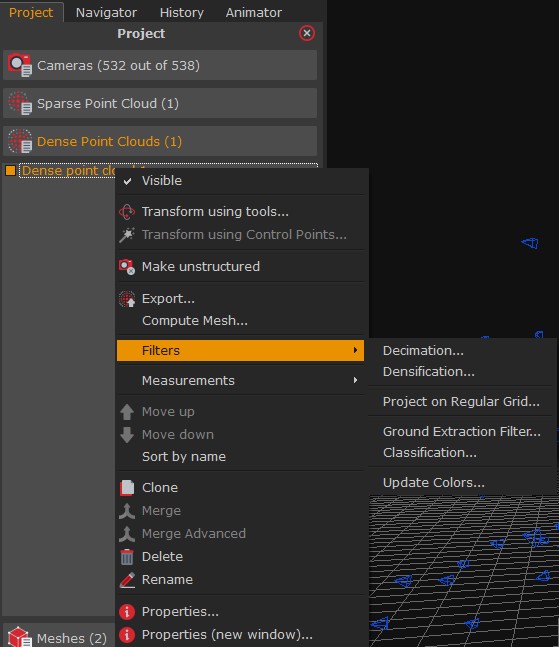

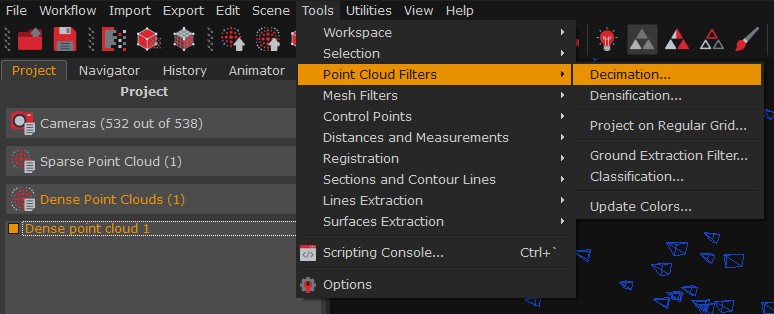

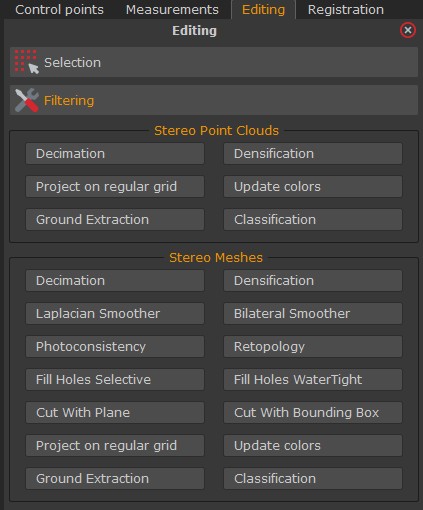

You can access the dense point cloud filters:

1. By right-clicking the dense point cloud name in the Project panel and selecting the Filters option;

2. By clicking the Tools menu and selecting the “Point Cloud Filters” option;

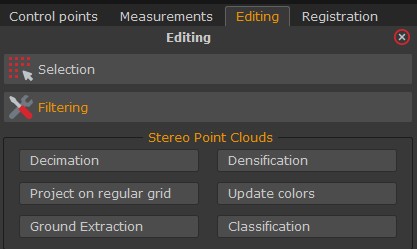

3. From the Stereo Point Clouds section in the Filtering module of the Editing panel.

Below you can find a description of the different filters:

Decimation: this filter allows you to select the desired point cloud and set the number of points you want to keep. The point cloud will be regenerated, reducing the point count to the specified maximum.

It is possible to decimate the desired point cloud using these different methods:

– Maximum points count;

– Maximum points count with octree: an octree is a data structure ensuring a more homogeneous distribution of the decimated points;

– Average point distance;

– Octree size.

Once you have entered the threshold for one of the Decimation filter options (target points, octree size, etc.), you can either apply the filter to the selected point cloud or clone it and filter the copy. The latter keeps the original dense point cloud unchanged.
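The idea behind the octree-based options can be approximated with a voxel grid: space is split into cubic cells, and each occupied cell keeps one representative point, so the result is spread evenly instead of following the original density. The sketch below is a minimal illustration, not Zephyr's implementation. It assumes points are plain `(x, y, z)` tuples and that the cell centroid is the representative; the function names `voxel_decimate` and `decimate_to_max_points` are hypothetical. The second function mimics a plain maximum point count by uniform stride subsampling.

```python
import math
from collections import defaultdict

def voxel_decimate(points, cell_size):
    """Keep one point (the centroid) per occupied cubic cell of side cell_size."""
    if cell_size <= 0:
        raise ValueError("cell_size must be positive")
    cells = defaultdict(list)
    for x, y, z in points:
        # Integer index of the cell containing the point, along each axis.
        key = (math.floor(x / cell_size), math.floor(y / cell_size), math.floor(z / cell_size))
        cells[key].append((x, y, z))
    decimated = []
    for members in cells.values():
        n = len(members)
        decimated.append(tuple(sum(p[i] for p in members) / n for i in range(3)))
    return decimated

def decimate_to_max_points(points, max_points):
    """Uniform stride subsampling down to at most max_points points."""
    if max_points <= 0:
        return []
    if len(points) <= max_points:
        return list(points)
    step = len(points) / max_points  # > 1, so the picked indices are distinct
    return [points[int(i * step)] for i in range(max_points)]

cloud = [(0.1, 0.1, 0.0), (0.2, 0.3, 0.0), (1.5, 0.2, 0.0)]
print(voxel_decimate(cloud, 1.0))  # the first two points fall in the same cell
```

A larger cell size plays the same role as a larger octree size: fewer, more widely spaced points.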

Densification: the point cloud will be regenerated, increasing the number of points according to the specified value. Only structured point clouds can be densified.

Project on the regular grid: this filter projects the dense point cloud onto a regular grid so that the points are evenly spaced. You can define the grid spacing as well as the grid research value used for the grid generation. This tool is useful in surveying, mapping, mining, and construction scenarios.
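The resampling can be sketched as follows, under stated assumptions: the grid lies on the XY plane, and each grid node takes the mean height of the input points within a search radius. Treating that radius as the "grid research value" is our interpretation, not documented behavior, and `project_on_grid` is a hypothetical name. The brute-force neighbor search is quadratic and only meant for illustration.

```python
import math

def project_on_grid(points, spacing, search_radius):
    """Resample (x, y, z) points onto a regular XY grid with the given spacing.
    Each node gets the mean z of the input points within search_radius (in XY);
    nodes with no nearby points are left out."""
    if spacing <= 0 or search_radius <= 0:
        raise ValueError("spacing and search_radius must be positive")
    if not points:
        return []
    xs = [p[0] for p in points]
    ys = [p[1] for p in points]
    x0, y0 = min(xs), min(ys)
    nx = int(math.floor((max(xs) - x0) / spacing)) + 1
    ny = int(math.floor((max(ys) - y0) / spacing)) + 1
    grid_points = []
    for i in range(nx):
        for j in range(ny):
            gx, gy = x0 + i * spacing, y0 + j * spacing
            # Brute-force search for input points near this grid node.
            near = [z for x, y, z in points if math.hypot(x - gx, y - gy) <= search_radius]
            if near:
                grid_points.append((gx, gy, sum(near) / len(near)))
    return grid_points

print(project_on_grid([(0, 0, 1), (1, 0, 3), (0, 1, 5), (1, 1, 7)], 1.0, 0.5))
```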

Ground Extraction Filter: allows the automatic extraction of the terrain from a dense point cloud to create a 3D Digital Terrain Model (DTM), removing everything above the ground, such as trees and houses. Users can select the dense point cloud and set the following parameters:

– Scene: indicates the type of the 3D reconstructed scenario;

– Resolution: the grid size of the cloth used to cover the terrain. The bigger the resolution, the coarser the DTM;

– Height threshold: the threshold used to classify the points as ground or non-ground, based on the distance between each point and the simulated terrain.
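The role of the two numeric parameters can be illustrated with a deliberately simplified sketch. It does not perform a cloth simulation: as a loud assumption, the simulated terrain is replaced by the lowest point in each grid cell, with the cell size standing in for the resolution. Points higher than the height threshold above that terrain are labeled non-ground. `classify_ground` is a hypothetical name.

```python
import math
from collections import defaultdict

def classify_ground(points, resolution, height_threshold):
    """Label each (x, y, z) point as ground (True) or non-ground (False).
    ASSUMPTION: the terrain is approximated by the lowest z in each
    resolution x resolution cell, not by a real cloth simulation."""
    def cell(p):
        return (math.floor(p[0] / resolution), math.floor(p[1] / resolution))

    lowest = defaultdict(lambda: math.inf)
    for p in points:
        c = cell(p)
        lowest[c] = min(lowest[c], p[2])
    # Ground = within height_threshold of the terrain height of its cell.
    return [p[2] - lowest[cell(p)] <= height_threshold for p in points]

pts = [(0.2, 0.2, 10.0), (0.5, 0.5, 10.1), (0.7, 0.3, 15.0), (1.5, 0.5, 11.0)]
print(classify_ground(pts, 1.0, 0.5))  # the point 5 m above its cell is non-ground
```

Larger cells give a coarser terrain estimate, which matches the behavior described for the resolution parameter.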

Classification: this filter automatically identifies different scene objects, allowing users to choose from three categories: buildings, roads, and trees. It works only with dense point clouds generated from aerial datasets and integrates the ground extraction filter, which is enabled by default. Once complete, a new dense cloud for each selected object category will be added to the workspace.

Update colors: this filter allows for re-computing dense point cloud colors:

– From images: recomputes the colors from the workspace pictures (e.g., after changing the workspace pictures when dealing with multispectral imagery);

– By elevation: it updates the colors according to the elevation of the points. A list of color maps allows you to pick among several color palettes;

– By normals: using normal maps from the dataset images;

– Uniform: choosing one specific color from a color palette;

– By confidence: different sets of colors can be applied depending on each point's confidence, which is derived from the cameras that observe it.
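Coloring by elevation amounts to normalizing each point's height to the range of the cloud and looking it up in a color map. The sketch below is a minimal illustration assuming a three-stop blue, green, red palette with linear interpolation between stops; Zephyr's actual color maps and the name `colors_by_elevation` are not taken from the product.

```python
def lerp(a, b, t):
    """Linear interpolation between a and b for t in [0, 1]."""
    return a + (b - a) * t

def colors_by_elevation(points, palette=((0, 0, 255), (0, 255, 0), (255, 0, 0))):
    """Map each point's z to an RGB color by interpolating over the palette stops."""
    zs = [p[2] for p in points]
    z_min, z_max = min(zs), max(zs)
    span = (z_max - z_min) or 1.0  # avoid division by zero on flat clouds
    n_segments = len(palette) - 1
    colors = []
    for z in zs:
        t = (z - z_min) / span * n_segments  # position along the palette
        k = min(int(t), n_segments - 1)      # palette segment containing t
        c0, c1 = palette[k], palette[k + 1]
        colors.append(tuple(round(lerp(a, b, t - k)) for a, b in zip(c0, c1)))
    return colors

print(colors_by_elevation([(0, 0, 0), (0, 0, 5), (0, 0, 10)]))
```

The lowest point takes the first palette color and the highest takes the last; swapping the palette tuple is the equivalent of picking another color map.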

· Mesh Filters

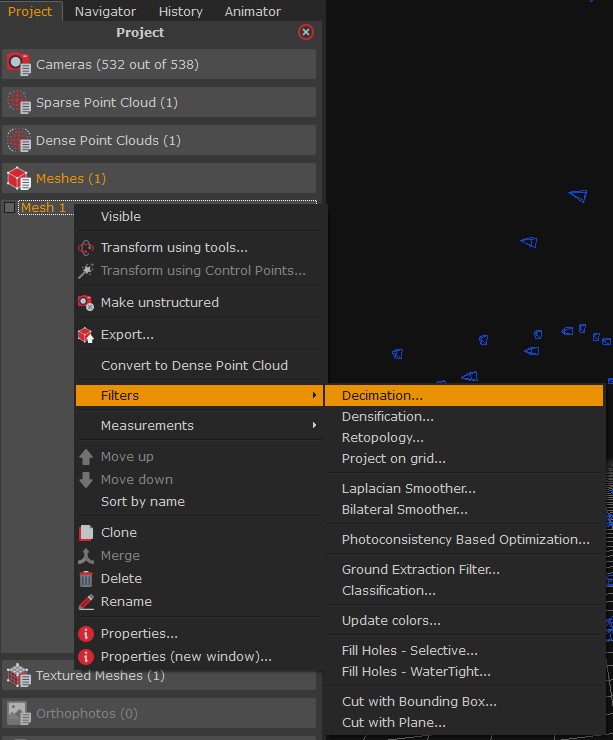

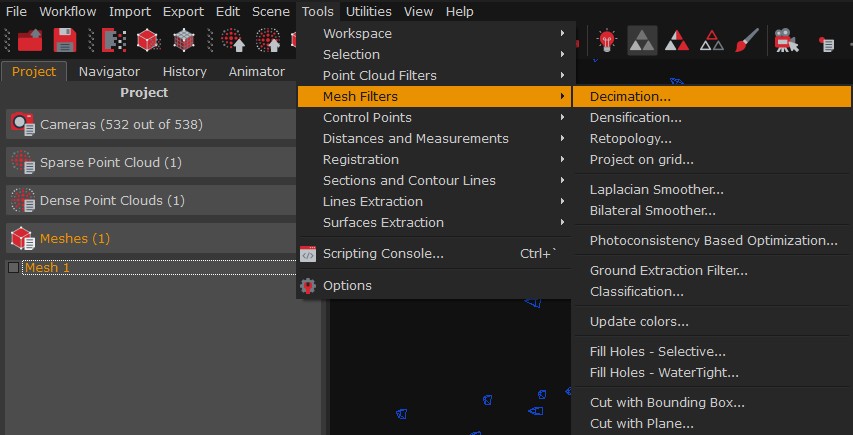

You can access the mesh filters:

1. By right-clicking the mesh name in the Project panel and selecting the Filters option;

2. By clicking the Tools menu and selecting the “Mesh Filters” option;

3. From the Stereo Meshes section in the Filtering module of the Editing panel.

Below you can find a description of the different filters:

Decimation: allows for selecting the desired mesh from the dropdown menu and setting the target number of vertices. The mesh will be regenerated, reducing the vertex count to the specified maximum.

Available decimation options:

– Preserve the boundaries: the boundary vertices are never decimated, so the mesh boundary stays identical to that of the initial mesh; the drawback is that the boundary remains much denser than the inner part, which ends up with fewer vertices and less detail;

– Constraint distances from the input mesh: adds an extra constraint, based on the distance from the starting mesh, when selecting the vertices to decimate.

Users can either apply the filter to the selected mesh or clone it and filter it.
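An option like "Preserve the boundaries" requires knowing which vertices lie on the mesh boundary. In a triangle mesh, a boundary edge is one used by exactly one triangle, and its endpoints are boundary vertices. The sketch below shows that test on a mesh given as a list of vertex-index triples; it is a generic illustration, and `boundary_vertices` is a hypothetical name, not part of Zephyr.

```python
from collections import Counter

def boundary_vertices(triangles):
    """Return the set of vertex indices lying on boundary edges.
    A boundary edge belongs to exactly one triangle."""
    edge_count = Counter()
    for a, b, c in triangles:
        for u, v in ((a, b), (b, c), (c, a)):
            edge_count[(min(u, v), max(u, v))] += 1  # undirected edge key
    boundary = set()
    for (u, v), count in edge_count.items():
        if count == 1:
            boundary.update((u, v))
    return boundary

# Four triangles fanned around center vertex 4: only the corners are on the boundary.
print(boundary_vertices([(0, 1, 4), (1, 2, 4), (2, 3, 4), (3, 0, 4)]))
```

A decimator honoring the option would simply skip every vertex in this set, which is why the boundary ends up denser than the interior.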

Densification: the mesh will be regenerated, increasing the number of vertices up to the specified maximum value. You can either apply the filter to the selected mesh or clone it and filter the mesh copy.

Retopology: this filter allows you to optimize the mesh topology. Triangles will be simplified and Zephyr will try to generate larger triangles where possible. The greater the optimization factor, the larger the size of the generated triangles. Please note that the Retopology filter may cause a loss of mesh detail, especially in 3D geometries with well-defined edges.

Project on the regular grid: this filter projects the mesh onto a regular grid so that the points are evenly spaced. You can define the grid spacing as well as the grid research value used for the grid generation. This tool is useful in surveying, mapping, mining, and construction scenarios.

Laplacian smoother: reduces mesh noise but does not preserve sharp edges. The more iterations are set, the smoother the mesh. This filter is best suited to subjects without sharp edges (e.g., human body datasets).
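Classic uniform Laplacian smoothing moves each vertex toward the average of its neighbors at every iteration, which explains both the noise reduction and the loss of sharp edges. The sketch below is a textbook version, not Zephyr's implementation; the step `factor` and the name `laplacian_smooth` are assumptions made for illustration.

```python
def laplacian_smooth(vertices, triangles, iterations=1, factor=0.5):
    """Uniform Laplacian smoothing: each iteration moves every vertex a fraction
    `factor` of the way toward the average of its edge-connected neighbors."""
    neighbors = [set() for _ in vertices]
    for a, b, c in triangles:
        for u, v in ((a, b), (b, c), (c, a)):
            neighbors[u].add(v)
            neighbors[v].add(u)
    verts = [tuple(v) for v in vertices]
    for _ in range(iterations):
        new_verts = []
        for i, v in enumerate(verts):
            if not neighbors[i]:
                new_verts.append(v)  # isolated vertex: leave unchanged
                continue
            nbrs = neighbors[i]
            avg = [sum(verts[j][k] for j in nbrs) / len(nbrs) for k in range(3)]
            new_verts.append(tuple(v[k] + factor * (avg[k] - v[k]) for k in range(3)))
        verts = new_verts  # update all vertices simultaneously
    return verts

# A square with a raised center vertex: one iteration halves the spike.
verts = [(0, 0, 0), (2, 0, 0), (2, 2, 0), (0, 2, 0), (1, 1, 1)]
tris = [(0, 1, 4), (1, 2, 4), (2, 3, 4), (3, 0, 4)]
print(laplacian_smooth(verts, tris, iterations=1)[4])
```

Every vertex is pulled toward its neighbors regardless of whether it sits on noise or on a genuine edge, so more iterations flatten both.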

Bilateral smoother: reduces 3D geometry noise while preserving and emphasizing edges where possible. This filter is suitable for urban and construction scenarios.
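The edge-preserving behavior comes from the bilateral weighting: a neighbor counts only if it is close both in position and in value, so samples across a sharp step barely influence each other. Mesh bilateral filters apply this idea to vertices and normals; as a simplifying assumption, the sketch below shows the principle on a 1D height profile. `bilateral_filter_1d` and its parameters are hypothetical names chosen for illustration.

```python
import math

def bilateral_filter_1d(values, radius, sigma_spatial, sigma_range):
    """Bilateral filter on a 1D signal: neighbors are weighted by their distance
    in position (sigma_spatial) and their difference in value (sigma_range)."""
    n = len(values)
    out = []
    for i in range(n):
        total, weight_sum = 0.0, 0.0
        for j in range(max(0, i - radius), min(n, i + radius + 1)):
            w_spatial = math.exp(-((i - j) ** 2) / (2 * sigma_spatial ** 2))
            w_range = math.exp(-((values[j] - values[i]) ** 2) / (2 * sigma_range ** 2))
            w = w_spatial * w_range
            total += w * values[j]
            weight_sum += w  # never zero: j == i contributes weight 1
        out.append(total / weight_sum)
    return out

step = [0, 0, 0, 0, 10, 10, 10, 10]
print(bilateral_filter_1d(step, 2, 1.0, 1.0))  # the step edge is kept
print(bilateral_filter_1d(step, 2, 1.0, 1e6))  # behaves like a plain blur
```

With a huge `sigma_range` the range weight is always close to 1 and the filter degenerates into an ordinary Gaussian blur that rounds the edge, much like the Laplacian smoother.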

Ground Extraction: allows the automatic terrain extraction from a mesh to create a 3D Digital Terrain Model (DTM). It deletes vertical objects, such as trees and buildings. Users can select the mesh and set the following parameters:

– Scene: indicates the type of the 3D reconstructed scenario;

– Resolution: the grid size of the cloth used to cover the terrain. The bigger the resolution, the coarser the DTM;

– Height threshold: the threshold used to classify the mesh into ground and non-ground parts, based on the distance between each vertex and the simulated terrain.

Classification: this filter automatically identifies different scene objects, allowing users to choose from three categories: buildings, roads, and trees. It works only with dense point clouds generated from aerial datasets and integrates the ground extraction filter, which is enabled by default. Once complete, a new dense cloud for each selected object category will be added to the workspace.

Update colors: this filter allows for re-computing mesh colors:

– From images: recomputes the colors from the workspace pictures (e.g., after changing the workspace pictures when dealing with multispectral imagery);

– By elevation: it updates the colors according to the elevation of the points. A list of color maps allows you to pick among multiple color palettes;

– By normals: using normal maps from the dataset images;

– Uniform: choosing one specific color from a color palette;

– By curvature: different sets of colors can be applied according to the local geometry, which means the color varies depending on whether the area is flat, angular, or curved.

Fill Holes (Selective or Watertight):

Two modes are available:

– Watertight: allows for filling all the holes at once automatically;

– Selective: allows for filling holes individually in the selection window. Users can select a specific hole by choosing the corresponding number or using the pick hole button. The Selective mode closes single holes faster but may not work for complex cases, for which the Watertight mode is recommended.

The color of the triangles closing a hole will most likely be wrong, because the hole area is not visible from any camera and it is impossible to determine the color of something not seen by any picture.
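One simple way to close a single hole is fan triangulation: add the centroid of the hole's boundary loop as a new vertex and connect it to every boundary edge. This is a generic technique shown as a minimal sketch, not necessarily what Zephyr does; it assumes the hole is given as an ordered list of boundary vertex indices, and `fill_hole_fan` is a hypothetical name. The added vertex is also a concrete example of the color problem: no camera ever observed it.

```python
def fill_hole_fan(vertices, loop):
    """Close a hole bounded by the ordered vertex indices in `loop` by adding
    its centroid as a new vertex and connecting it to every boundary edge.
    Returns (new_vertices, new_triangles)."""
    if len(loop) < 3:
        raise ValueError("a hole needs at least 3 boundary vertices")
    n = len(loop)
    centroid = tuple(sum(vertices[i][k] for i in loop) / n for k in range(3))
    new_vertices = list(vertices) + [centroid]
    c = len(new_vertices) - 1  # index of the added centroid vertex
    new_triangles = [(loop[i], loop[(i + 1) % n], c) for i in range(n)]
    return new_vertices, new_triangles

square = [(0, 0, 0), (2, 0, 0), (2, 2, 0), (0, 2, 0)]
print(fill_hole_fan(square, [0, 1, 2, 3]))
```

The loop order sets the orientation of the new triangles, so reversing it flips their normals. A fan works for small, roughly planar holes; complex holes need a more robust method, which mirrors the advice to use the Watertight mode in those cases.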

Cut with plane: this function allows for cutting the mesh cleanly along a plane, regenerating the triangles along the cut.

Cut with bounding box: this function allows for cutting the mesh using the bounding box.
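Both cuts rest on simple geometric tests. For a plane, each vertex gets a signed distance. Triangles entirely on the kept side survive, and every edge whose endpoints have opposite signs is intersected with the plane by linear interpolation. Those intersection points are where new boundary triangles would be generated. For an axis-aligned bounding box, a vertex is kept if it lies inside the box on all three axes. The sketch below illustrates these tests under our own assumptions (it keeps the positive side of the plane and does not retriangulate the cut). All function names are hypothetical.

```python
def signed_distance(p, point_on_plane, normal):
    """Signed distance of p from the plane (scaled by |normal|)."""
    return sum((p[k] - point_on_plane[k]) * normal[k] for k in range(3))

def cut_with_plane(vertices, triangles, point_on_plane, normal):
    """Keep triangles fully on the positive side of the plane and return the
    intersection points of the plane with every edge that crosses it."""
    d = [signed_distance(v, point_on_plane, normal) for v in vertices]
    kept = [t for t in triangles if all(d[i] >= 0 for i in t)]
    crossings = set()
    for a, b, c in triangles:
        for u, v in ((a, b), (b, c), (c, a)):
            if d[u] * d[v] < 0:  # endpoints on opposite sides
                crossings.add((min(u, v), max(u, v)))
    cut_points = []
    for u, v in sorted(crossings):
        t = d[u] / (d[u] - d[v])  # where the distance interpolates to zero
        cut_points.append(tuple(vertices[u][k] + t * (vertices[v][k] - vertices[u][k])
                                for k in range(3)))
    return kept, cut_points

def cut_with_box(vertices, triangles, box_min, box_max):
    """Keep only triangles whose three vertices lie inside the axis-aligned box."""
    inside = [all(box_min[k] <= v[k] <= box_max[k] for k in range(3)) for v in vertices]
    return [t for t in triangles if all(inside[i] for i in t)]

verts = [(0, 0, 0), (2, 0, 0), (0, 2, 0), (2, 2, 0), (3, 0, 0)]
tris = [(0, 1, 2), (1, 3, 2), (1, 4, 3)]
print(cut_with_plane(verts, tris, (1, 0, 0), (1, 0, 0)))
```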

Please note that some filters (Decimation, Laplacian and Bilateral smoothers, Retopology, and Update colors) can also be applied to a portion of the mesh. Users must first select the desired mesh area (using the selection tools) and then enable the “filter only selected triangles” option in the corresponding filter window before running the filter.

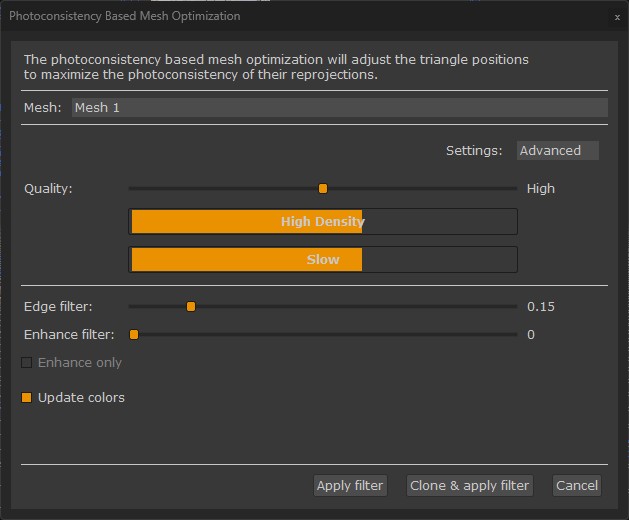

· Photoconsistency-Based Mesh Optimization

Photoconsistency is a photo-based filter that optimizes a 3D mesh to make it as consistent as possible with the source images. It runs an iterative minimization in which the surface is modified at each iteration to reduce the reprojection error of one image onto another, thus recovering extra detail in the 3D geometry.
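To make "consistent with the images" concrete: a common photoconsistency measure in multi-view stereo is the zero-mean normalized cross-correlation (NCC) between two image patches that the current surface maps onto each other. The score approaches 1 when the patches agree up to brightness and contrast changes. Zephyr's actual cost function is not described here, so using NCC is an assumption. The sketch below computes only the score for two already-sampled patches (`ncc` is a hypothetical name); the reprojection through the mesh and the surface update are not shown.

```python
import math

def ncc(patch_a, patch_b):
    """Zero-mean normalized cross-correlation of two equal-length intensity lists.
    Returns a value in [-1, 1]; 1 means the patches match up to gain and offset."""
    if len(patch_a) != len(patch_b) or not patch_a:
        raise ValueError("patches must be non-empty and the same size")
    n = len(patch_a)
    mean_a = sum(patch_a) / n
    mean_b = sum(patch_b) / n
    da = [a - mean_a for a in patch_a]
    db = [b - mean_b for b in patch_b]
    denom = math.sqrt(sum(x * x for x in da) * sum(y * y for y in db))
    if denom == 0:
        return 0.0  # a textureless patch carries no matching information
    return sum(x * y for x, y in zip(da, db)) / denom

print(ncc([1, 2, 3, 4], [2, 4, 6, 8]))  # same pattern, different contrast
```

The zero-mean normalization makes the score insensitive to exposure differences between photos, which is one reason this family of measures is popular for comparing views. An optimizer would move mesh vertices in the direction that raises this score across image pairs.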

Photoconsistency-based mesh optimization can be applied to meshes in different ways:

1. Photoconsistency can be run directly during the mesh reconstruction phase. It is disabled in some presets but can be enabled in the advanced settings. The default Photoconsistency parameters depend on the selected preset.

2. It can also be run from the Tools menu > Mesh Filters > Photoconsistency-based Optimization.

3. The same Photoconsistency parameters are also available from the Editing tab > Filtering > Photoconsistency.

Photoconsistency parameters are described below:

– Quality: determines the balance between processing time and result fidelity. Higher quality steps recover more detail and generate denser meshes but take longer, while lower steps are faster and generally more robust to noise in low-quality datasets;

– Enhance filter: a post-processing method that can generate “fake details” by increasing the number of mesh triangles. Depending on the desired result and the quality of the photos, adjust the slider carefully so that it adds detail rather than noise;

– Edge filter: this parameter emphasizes edges; the more angular the mesh (buildings, objects, etc.), the more you can increase this value. A value of 0.15 is usually appropriate;

– Update colors: calculates vertex colors according to the cameras. The colors are recalculated after each Photoconsistency run.